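After one of our projects had been running for a while, its data kept growing, and several tables reached more than 40 million rows. At that point we decided to split these large tables to speed up data operations. The first step was finding a key to split on. We settled on the deviceId code (which covers IMEI, MEID, and ESN), a string made up of digits and letters. The original plan was to add up the first six characters of the deviceId and use the resulting number to pick the table. Checking the formats of these codes ruled that out: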

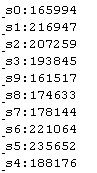

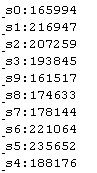

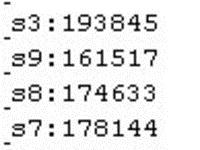

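All three codes are structured, and the leading characters of each carry a fixed meaning. Splitting on them left some tables with far more rows than others, which was not the result we wanted, so we needed another approach. We finally decided to split on the last character of the deviceId: it behaves like a random value, so the rows spread fairly evenly.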

A test run on a little over 2 million rows gave satisfactory results.
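The evenness check can be sketched in a few lines of Python. This is a minimal illustration, not the test that was actually run: the function names and the sample IDs are made up, and real input would come from the deviceId column.

```python
from collections import Counter


def last_char_counts(device_ids):
    """Count how many IDs end in each character (case-insensitive)."""
    return Counter(d[-1].lower() for d in device_ids if d)


def skew(counts):
    """Ratio of the fullest bucket to the emptiest; 1.0 means perfectly even."""
    return max(counts.values()) / min(counts.values())


# Hypothetical sample IDs, invented for illustration only.
sample = ["ABC1231", "ABC1232", "XYZ9991", "XYZ9992"]
counts = last_char_counts(sample)
```

A skew close to 1.0 on a large sample suggests the last character is a reasonable split key. A high skew is the symptom described above for the leading characters.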

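With the split rule settled, we could start splitting. That raised the next problem: how to distribute more than 40 million rows into the new tables. This was no small job, and the project was live the whole time.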

Method 1:

I wrote a stored procedure meant to split a whole table in one go. In theory this should have worked:

BEGIN

DECLARE v,num,total INT;

SET num=100000;

SET total=40000000;

SET v=1;

WHILE v<=CEILING(total/num) DO

CALL integral_log_analysis((v-1)*num+1,v*num);

SET v=v+1;

END WHILE;

END

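The stored procedure integral_log_analysis performs the actual split for one range of rows. I expected this to finish quickly, but it turned out not to be workable: on a local machine, 20 million rows took a whole night to process, and the project could not be taken offline for that long.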
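The loop arithmetic in the procedure can be checked outside the database. The sketch below only reproduces the range calculation (v-1)*num+1 to v*num; the name batch_ranges is hypothetical, and the call to integral_log_analysis itself is not modelled.

```python
import math


def batch_ranges(total, batch_size):
    """Yield inclusive (start, end) id ranges, one per loop iteration."""
    for v in range(1, math.ceil(total / batch_size) + 1):
        yield (v - 1) * batch_size + 1, v * batch_size


# Same values as in the procedure: 40 million rows, 100,000 per call.
ranges = list(batch_ranges(40_000_000, 100_000))
```

With these values the loop makes 400 calls. When the total is not a multiple of the batch size, the last range runs past the total, exactly as it would in the procedure.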

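Method 2:

The next idea was to export the data, split it locally, and upload the result to the server, so the project would not have to stop for as long. The first step was the export, and exporting 9 GB of data is not easy: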

mysqldump -uroot -pdbpasswd dbname test>db.sql;

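This exports the structure and data of one table, but importing the dump locally took far too long. After a whole night it still had not finished, so I gave up on this approach.

Method 3:

Export the data to text files according to the split rule, then load the files into the new tables. This is the method that worked, and it was fast: the whole run took about half an hour.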

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='1' or lower(RIGHT(imei,1))='a' or lower(RIGHT(imei,1))='k' or lower(RIGHT(imei,1))='u' Into OutFile '/var/lib/mysql/integral_log_s1.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='2' or lower(RIGHT(imei,1))='b' or lower(RIGHT(imei,1))='l' or lower(RIGHT(imei,1))='v' Into OutFile '/var/lib/mysql/integral_log_s2.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='3' or lower(RIGHT(imei,1))='c' or lower(RIGHT(imei,1))='m' or lower(RIGHT(imei,1))='w' Into OutFile '/var/lib/mysql/integral_log_s3.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='4' or lower(RIGHT(imei,1))='d' or lower(RIGHT(imei,1))='n' or lower(RIGHT(imei,1))='x' Into OutFile '/var/lib/mysql/integral_log_s4.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='5' or lower(RIGHT(imei,1))='e' or lower(RIGHT(imei,1))='o' or lower(RIGHT(imei,1))='y' Into OutFile '/var/lib/mysql/integral_log_s5.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='6' or lower(RIGHT(imei,1))='f' or lower(RIGHT(imei,1))='p' or lower(RIGHT(imei,1))='z' Into OutFile '/var/lib/mysql/integral_log_s6.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='7' or lower(RIGHT(imei,1))='g' or lower(RIGHT(imei,1))='q' Into OutFile '/var/lib/mysql/integral_log_s7.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='8' or lower(RIGHT(imei,1))='h' or lower(RIGHT(imei,1))='r' Into OutFile '/var/lib/mysql/integral_log_s8.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='9' or lower(RIGHT(imei,1))='i' or lower(RIGHT(imei,1))='s' Into OutFile '/var/lib/mysql/integral_log_s9.txt';

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='0' or lower(RIGHT(imei,1))='j' or lower(RIGHT(imei,1))='t' Into OutFile '/var/lib/mysql/integral_log_s0.txt';

load data local infile "/var/lib/mysql/integral_log_s0.txt" ignore into table integral_log_s0(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s1.txt" ignore into table integral_log_s1(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s2.txt" ignore into table integral_log_s2(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s3.txt" ignore into table integral_log_s3(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s4.txt" ignore into table integral_log_s4(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s5.txt" ignore into table integral_log_s5(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s6.txt" ignore into table integral_log_s6(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s7.txt" ignore into table integral_log_s7(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s8.txt" ignore into table integral_log_s8(userId,imei,fid,integral,activation,type,insertTime);

load data local infile "/var/lib/mysql/integral_log_s9.txt" ignore into table integral_log_s9(userId,imei,fid,integral,activation,type,insertTime);
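The WHERE clauses above follow one rule: a trailing digit maps to the table with that digit, and a trailing letter maps to its position in the alphabet modulo 10 (a=1, ..., i=9, j=0, k=1, and so on). Application code has to apply the same rule when it reads or writes a row. The sketch below is one way to express it; shard_of, table_for, and chars_for are hypothetical names, not part of the project.

```python
import string


def shard_of(device_id):
    """Return the shard digit 0-9 chosen by the last character of the ID."""
    c = device_id[-1].lower()
    if c in string.digits:
        return int(c)
    if c in string.ascii_lowercase:
        # a=1, b=2, ..., i=9, j=0, k=1, ...: alphabet position modulo 10
        return (ord(c) - ord("a") + 1) % 10
    raise ValueError(f"unexpected last character: {c!r}")


def table_for(device_id):
    """Name of the split table that holds rows for this device ID."""
    return f"integral_log_s{shard_of(device_id)}"


def chars_for(shard):
    """All trailing characters that the export statements send to a shard."""
    candidates = string.digits + string.ascii_lowercase
    return [c for c in candidates if shard_of("x" + c) == shard]
```

chars_for can be used to cross-check the hand-written statements: for shard 0 it lists 0, j, and t, which matches the last export statement.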

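Note: if you do not want the auto-increment id carried over to the new table, list the columns you want in the select. The path after outfile is where the text file will be written: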

Select userId,imei,fid,integral,0,type,insertTime From `integral_log` where lower(RIGHT(imei,1))='0' or lower(RIGHT(imei,1))='j' or lower(RIGHT(imei,1))='t' Into OutFile '/var/lib/mysql/integral_log_s0.txt';

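Before loading the data, drop any indexes and constraints on the target table so the load runs faster, and add them back afterwards. Also list the columns in load data local infile, so the fields in the file cannot be matched to the wrong columns: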

load data local infile "/var/lib/mysql/integral_log_s0.txt" ignore into table integral_log_s0(userId,imei,fid,integral,activation,type,insertTime);

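With the data loaded, most of the work was done. What remained was adding the indexes and constraints back. Each table still held more than 4 million rows after the split, so indexing every one of them was still somewhat painful.

At first I tested with

create index index1 on integral_log_s0(imei, fid, type);

and found it slower than

ALTER TABLE integral_log_s0 add index index1(imei, fid, type);

so I used the ALTER TABLE form. Even so, one table took more than 40 minutes, which was too slow and affected user access. I then tuned the MySQL configuration in my.cnf: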

[client]

#password = your_password

port = 3306

socket = /var/lib/mysql/mysql.sock

[mysqld]

port = 3306

socket = /var/lib/mysql/mysql.sock

datadir = /var/lib/mysql

log-error = /var/log/mysql/mysql-error.log

pid-file = /var/lib/mysql/mysql.pid

#skip-external-locking

skip-locking

skip-name-resolve

event_scheduler=ON

character-set-server = utf8

max_connections = 6000

max_connect_errors = 6000

wait_timeout=600

interactive_timeout=600

log-bin=mysql-bin

expire_logs_days=15

log_slave_updates = 1

binlog_cache_size = 4M

max_binlog_cache_size = 8M

max_binlog_size = 1G

binlog-ignore-db = mysql

binlog-ignore-db = test

binlog-ignore-db = information_schema

key_buffer_size = 384M

sort_buffer_size = 6M

read_buffer_size = 4M

read_rnd_buffer_size = 16M

join_buffer_size = 2M

thread_cache_size = 64

query_cache_size = 128M

query_cache_limit = 2M

query_cache_min_res_unit = 2K

thread_concurrency = 8

table_cache = 1024

table_open_cache = 512

open_files_limit = 10240

back_log = 450

external-locking = FALSE

max_allowed_packet = 16M

default-storage-engine = MyISAM

thread_stack = 256K

#transaction_isolation = READ-COMMITTED

tmp_table_size = 256M

max_heap_table_size = 512M

bulk_insert_buffer_size = 64M

myisam_sort_buffer_size = 64M

myisam_max_sort_file_size = 4G

myisam_repair_threads = 1

myisam_recover

long_query_time = 2

slow_query_log

#skip-networking

[mysqldump]

quick

max_allowed_packet = 16M

[mysql]

no-auto-rehash

# Remove the next comment character if you are not familiar with SQL

#safe-updates

[isamchk]

key_buffer = 128M

sort_buffer_size = 128M

read_buffer = 2M

write_buffer = 2M

[myisamchk]

key_buffer_size = 256M

sort_buffer_size = 256M

read_buffer = 2M

write_buffer = 2M

[mysqlhotcopy]

interactive-timeout

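With this configuration, adding the index took only about 2 minutes per table. That completed the split. The process had its twists and turns, but the result was what we wanted.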
